"MiSO: Optimizing brain stimulation to create neural population activity states", Minai et al 2024

submitted by /u/gwern

With insect-like speed and agility, the tiny robot could someday aid in search-and-rescue missions.

(8 min)

Macro, a modeling tool developed by the MIT Energy Initiative, enables energy-system planners to explore options for developing infrastructure to support decarbonized, reliable, and low-cost power grids.

(7 min)

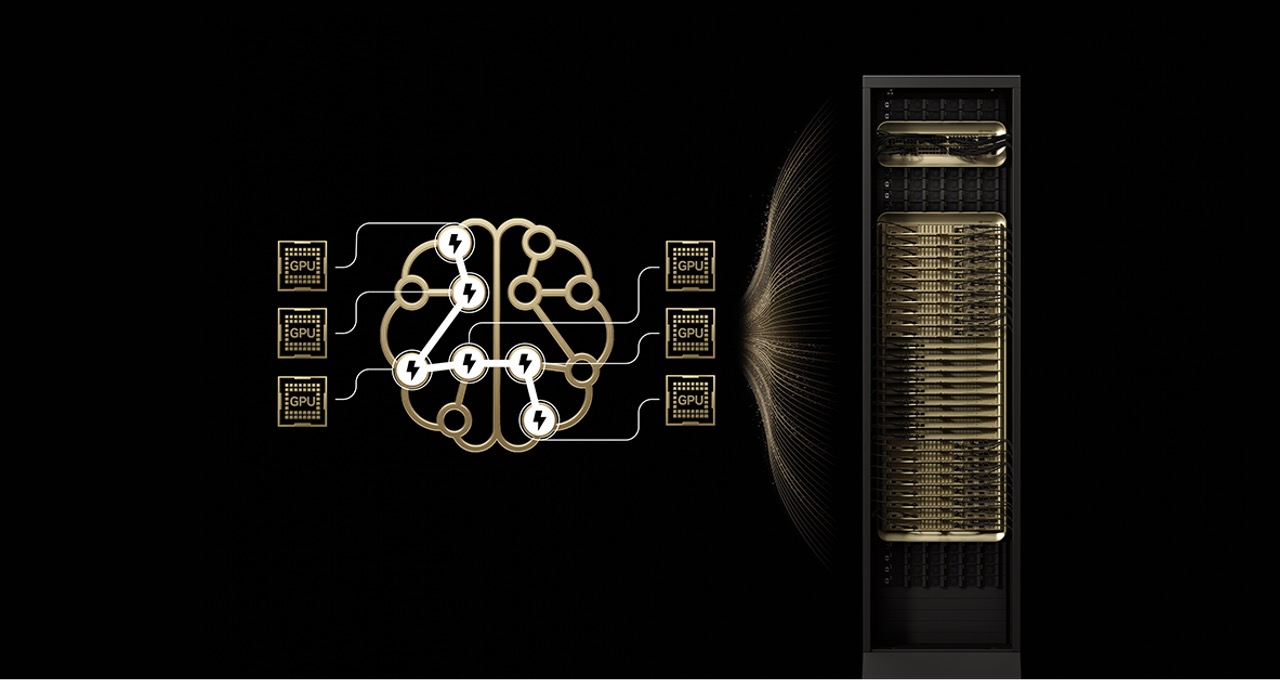

The top 10 most intelligent open-source models all use a mixture-of-experts architecture. Kimi K2 Thinking, DeepSeek-R1, Mistral Large 3 and others run 10x faster on NVIDIA GB200 NVL72. A look under the hood of virtually any frontier model today will reveal a mixture-of-experts (MoE) model architecture that mimics the efficiency of the human brain. Just

(10 min)

arXiv:2512.02386v1 Announce Type: new

Abstract: This paper studies the problem of risk-sensitive reinforcement learning (RSRL) in continuous time, where the environment is characterized by a controllable stochastic differential equation (SDE) and the objective is a potentially nonlinear functional of cumulative rewards. We prove that when the functional is an optimized certainty equivalent (OCE), the optimal policy is Markovian with respect to an augmented environment. We also propose CT-RS-q, a risk-sensitive q-learning algorithm based on a novel martingale characterization approach. Finally, we run a simulation study on a dynamic portfolio selection problem and illustrate the effectiveness of our algorithm.

(2 min)
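
For readers new to the term, the optimized certainty equivalent is usually written as below, following Ben-Tal and Teboulle. The abstract does not state the formula, so the notation here (utility $u$, reward $X$, scalar $\lambda$, risk parameters $\gamma$ and $\alpha$) is an assumption rather than the paper's own. The two special cases show how familiar risk measures fall out of the same definition.

```latex
\begin{align}
  \mathrm{OCE}_u(X) &= \sup_{\lambda \in \mathbb{R}}
      \bigl\{ \lambda + \mathbb{E}\,[\,u(X - \lambda)\,] \bigr\} \\
  % Entropic risk: u(t) = (1 - e^{-\gamma t}) / \gamma, with \gamma > 0
  u(t) = \tfrac{1}{\gamma}\bigl(1 - e^{-\gamma t}\bigr)
      &\;\Longrightarrow\;
      \mathrm{OCE}_u(X) = -\tfrac{1}{\gamma} \log \mathbb{E}\,[\,e^{-\gamma X}\,] \\
  % CVaR of the lower tail: u(t) = \min(t, 0) / \alpha, with 0 < \alpha < 1
  u(t) = \tfrac{1}{\alpha}\min(t, 0)
      &\;\Longrightarrow\;
      \mathrm{OCE}_u(X) = \sup_{\lambda}
      \bigl\{ \lambda - \tfrac{1}{\alpha}\,\mathbb{E}\,[\,(\lambda - X)_+\,] \bigr\}
      = \mathrm{CVaR}_\alpha(X)
\end{align}
```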

arXiv:2512.02489v1 Announce Type: new

Abstract: I present an application of established machine learning techniques to NHANES health survey data for predicting diabetes status. I compare baseline models (logistic regression, random forest, XGBoost) with a hybrid approach that uses an XGBoost feature encoder and a lightweight multilayer perceptron (MLP) head. Experiments show the hybrid model attains improved AUC and balanced accuracy compared to baselines on the processed NHANES subset. I release code and reproducible scripts to encourage replication.

(2 min)

arXiv:2512.02527v1 Announce Type: cross

Abstract: Traditional language models face a challenge from hallucinations. Their very presence casts a large, dangerous shadow over the promising realm of natural language processing. It becomes crucial to understand the various kinds of hallucinations that occur nowadays, their origins, and ways of reducing them. This document provides a concise and straightforward summary of that. It serves as a one-stop resource for a general understanding of hallucinations and how to mitigate them.

(2 min)

arXiv:2508.13963v2 Announce Type: replace

Abstract: In this paper we propose two algorithms in the tabular setting and an algorithm for the function approximation setting for the Stochastic Shortest Path (SSP) problem. SSP problems form an important class of problems in Reinforcement Learning (RL), as other types of cost-criteria in RL can be formulated in the setting of SSP. We show asymptotic almost-sure convergence for all our algorithms. We observe superior performance of our tabular algorithms compared to other well-known convergent RL algorithms. We further observe reliable performance of our function approximation algorithm compared to other algorithms in the function approximation setting.

(2 min)

arXiv:2009.04413v2 Announce Type: replace-cross

Abstract: SkipGram word embedding models with negative sampling, or SGN for short, form an elegant family of word embedding models. In this paper, we formulate a framework for word embedding, referred to as Word-Context Classification (WCC), that generalizes SGN to a wide family of models. The framework, which uses some "noise examples", is justified through theoretical analysis. The impact of noise distribution on the learning of the WCC embedding models is studied experimentally, suggesting that the best noise distribution is, in fact, the data distribution, in terms of both the embedding performance and the speed of convergence during training. Along the way, we discover several novel embedding models that outperform existing WCC models.

(2 min)
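
As background, the SGN objective that WCC generalizes can be sketched for a single word-context pair. This is the standard negative-sampling loss, not anything specific to the paper; the function names and toy vectors are illustrative assumptions.

```python
import math

def log_sigmoid(z):
    # Numerically stable log(1 / (1 + exp(-z))).
    return -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sgn_loss(word_vec, context_vec, noise_vecs):
    """Negative-sampling loss for one (word, context) pair and its noise contexts."""
    # The observed pair should score high ...
    positive = log_sigmoid(dot(word_vec, context_vec))
    # ... and each sampled noise context should score low.
    negative = sum(log_sigmoid(-dot(word_vec, n)) for n in noise_vecs)
    return -(positive + negative)

# Toy 2-d vectors (made up): the true context is aligned with the word,
# the single noise context points the opposite way.
loss = sgn_loss([1.0, 0.0], [2.0, 0.0], [[-2.0, 0.0]])
print(loss)
```

The noise vectors stand in for the "noise examples" in the abstract. How they are sampled is exactly the choice of noise distribution that the paper studies.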

arXiv:2501.18183v2 Announce Type: replace-cross

Abstract: We introduce a novel framework for decentralized projection-free optimization, extending projection-free methods to a broader class of upper-linearizable functions. Our approach leverages decentralized optimization techniques with the flexibility of upper-linearizable function frameworks, effectively generalizing traditional DR-submodular function optimization. We obtain the regret of $O(T^{1-\theta/2})$ with communication complexity of $O(T^{\theta})$ and number of linear optimization oracle calls of $O(T^{2\theta})$ for decentralized upper-linearizable function optimization, for any $0\le \theta \le 1$. This approach allows for the first results for monotone up-concave optimization with general convex constraints and non-monotone up-concave optimization with general convex constraints. Further, the above results for first order feedback are extended to zeroth order, semi-bandit, and bandit feedback.

(2 min)
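
To make the trade-off in the stated bounds concrete, the short sketch below tabulates the exponents of $T$ for a few values of $\theta$. It only restates the three rates quoted in the abstract; the function and key names are hypothetical.

```python
def tradeoff_exponents(theta):
    """Exponents of T in the quoted bounds, for a given 0 <= theta <= 1."""
    if not 0.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [0, 1]")
    return {
        "regret": 1.0 - theta / 2.0,   # O(T^{1 - theta/2})
        "communication": theta,        # O(T^{theta})
        "lo_calls": 2.0 * theta,       # O(T^{2 theta}) linear optimization oracle calls
    }

for theta in (0.0, 0.5, 1.0):
    e = tradeoff_exponents(theta)
    print(f"theta={theta}: regret T^{e['regret']}, "
          f"communication T^{e['communication']}, LO calls T^{e['lo_calls']}")
```

At $\theta = 1$ the regret exponent drops to $1/2$, at the cost of communication linear in $T$ and a quadratic number of oracle calls. At $\theta = 0$ communication is constant but the regret bound is linear in $T$.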

arXiv:2508.15690v4 Announce Type: replace-cross

Abstract: GRAFT is a structured multimodal benchmark designed to probe how well LLMs handle instruction following, visual reasoning, and tasks requiring tight visual-textual alignment. The dataset is built around programmatically generated charts and synthetically rendered tables, each paired with a carefully constructed, multi-step analytical question that depends solely on what can be inferred from the image itself. Responses are formatted in structured outputs such as JSON or YAML, enabling consistent and fine-grained evaluation of both reasoning processes and adherence to output specifications. The benchmark further introduces a taxonomy of reasoning operations, ranging from comparison and trend identification to ranking, aggregation, proportional estimation, and anomaly detection, to support a comprehensive assessment of model capabilities. Taken together, GRAFT provides a unified and scalable framework for evaluating multimodal LLMs on visually grounded, structured reasoning tasks, offering a more rigorous standard for future benchmarking efforts.

(2 min)

arXiv:2509.18228v2 Announce Type: replace-cross

Abstract: We present a new 10-meter map of dominant tree species in Swedish forests accompanied by pixel-level uncertainty estimates. The tree species classification is based on spatiotemporal metrics derived from Sentinel-1 and Sentinel-2 satellite data, combined with field observations from the Swedish National Forest Inventory. We apply an extreme gradient boosting model with Bayesian optimization to relate field observations to satellite-derived features and generate the final species map. Classification uncertainty is quantified using Shannon's entropy of the predicted class probabilities, which provides a spatially explicit measure of model confidence. The final model achieved an overall accuracy of 85% (F1 score = 0.82, Matthews correlation coefficient = 0.81), and mapped species distributions showed strong agreement with official forest statistics (Spearman's rho = 0.94).

(2 min)
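
A minimal sketch of the uncertainty measure named in the abstract, computed for one pixel's predicted class probabilities. The normalization by the maximum entropy and the example probability vectors are assumptions for illustration, not details taken from the paper.

```python
import math

def shannon_entropy(probs, base=2.0):
    """Shannon entropy of one pixel's predicted class probabilities."""
    total = sum(probs)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    entropy = 0.0
    for p in probs:
        q = p / total  # renormalise in case of rounding drift
        if q > 0:      # 0 * log(0) is treated as 0
            entropy -= q * math.log(q, base)
    return entropy

def normalized_entropy(probs):
    """Entropy scaled to [0, 1] by its maximum, log2(number of classes)."""
    k = len(probs)
    return shannon_entropy(probs) / math.log2(k) if k > 1 else 0.0

confident = [0.97, 0.01, 0.01, 0.01]   # one species clearly dominates
uncertain = [0.25, 0.25, 0.25, 0.25]   # model cannot separate the classes
print(normalized_entropy(confident), normalized_entropy(uncertain))
```

Low entropy marks pixels where the classifier commits to one species. Values near the maximum flag pixels whose label should be treated with caution.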

MIT CSAIL and LIDS researchers developed a mathematically grounded system that lets soft robots deform, adapt, and interact with people and objects, without violating safety limits.

(7 min)

Today, Mistral AI announced the Mistral 3 family of open-source multilingual, multimodal models, optimized across NVIDIA supercomputing and edge platforms. Mistral Large 3 is a mixture-of-experts (MoE) model — instead of firing up every neuron for every token, it only activates the parts of the model with the most impact. The result is efficiency

(6 min)
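
The idea of activating only part of the model per token can be sketched with a toy top-k router. This is a generic illustration of sparse mixture-of-experts routing, not Mistral Large 3's actual architecture; the softmax-over-top-k gating, the scalar "experts", and every name below are assumptions.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def top_k_route(router_logits, k=2):
    """Return {expert_index: weight} for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    # Renormalise the gate weights over the selected experts only.
    weights = softmax([router_logits[i] for i in chosen])
    return dict(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of the selected experts; the rest never run."""
    routing = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in routing.items()), routing

# Four toy experts, each a scalar function standing in for a feed-forward block.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
output, routing = moe_forward(3.0, experts, router_logits=[0.1, 2.0, 2.0, -1.0], k=2)
print(output, routing)
```

With `k=2`, only two of the four experts are evaluated for this input, which is where the compute saving comes from.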

At AWS re:Invent, NVIDIA and Amazon Web Services expanded their strategic collaboration with new technology integrations across interconnect technology, cloud infrastructure, open models and physical AI. As part of this expansion, AWS will support NVIDIA NVLink Fusion — a platform for custom AI infrastructure — for deploying its custom-designed silicon, including next-generation Trainium4 chips for

(9 min)

Human brain. Credit: NIH.

How does AI cure a major disease? Or, how does AI solve the national debt problem without the unbearable economic side effects? These questions are another way of saying how does AI get to superintelligence? AI is already excellent enough to generate accurate answers across knowledge areas, matching credible sources in its…

The post Could quantum storage fine-tune AI into world models and robotics-superintelligence? appeared first on Data Science Central.

(24 min)

Explore data, model, tensor, pipeline, and hybrid parallelism for scaling AI training across GPUs efficiently and effectively.

The post AI parallelism training strategies: Data, model, tensor and pipeline parallelism appeared first on Data Science Central.

(21 min)

arXiv:2512.00163v1 Announce Type: new

Abstract: Large Language Models (LLMs) have attracted significant attention for classification tasks, offering a flexible alternative to trusted classical machine learning models like LightGBM through zero-shot prompting. However, their reliability for structured tabular data remains unclear, particularly in high-stakes applications like financial risk assessment. Our study systematically evaluates LLMs and generates their SHAP values on financial classification tasks. Our analysis shows a divergence between LLMs' self-explanations of feature impact and their SHAP values, as well as notable differences between LLM and LightGBM SHAP values. These findings highlight the limitations of LLMs as standalone classifiers for structured financial modeling, but also instill optimism that improved explainability mechanisms coupled with few-shot prompting will make LLMs usable in risk-sensitive domains.

(2 min)

arXiv:2512.00298v1 Announce Type: new

Abstract: This study analyzes the impact of heterogeneity ("Variety") in Big Data by comparing classification strategies across structured (Epsilon) and unstructured (Rest-Mex, IMDB) domains. A dual methodology was implemented: evolutionary and Bayesian hyperparameter optimization (Genetic Algorithms, Optuna) in Python for numerical data, and distributed processing in Apache Spark for massive textual corpora. The results reveal a "complexity paradox": in high-dimensional spaces, optimized linear models (SVM, Logistic Regression) outperformed deep architectures and Gradient Boosting. Conversely, in text-based domains, the constraints of distributed fine-tuning led to overfitting in complex models, whereas robust feature engineering -- specifically Transformer-based embeddings (RoBERTa) and Bayesian Target Encoding -- enabled simpler models to generalize effectively. This work provides a unified framework for algorithm selection based on data nature and infrastructure constraints.

(3 min)

arXiv:2512.00619v1 Announce Type: new

Abstract: Continual learning remains a fundamental challenge in artificial intelligence, with catastrophic forgetting posing a significant barrier to deploying neural networks in dynamic environments. Inspired by biological memory consolidation mechanisms, we propose a novel framework for generative replay that leverages predictive coding principles to mitigate forgetting. We present a comprehensive comparison between predictive coding-based and backpropagation-based generative replay strategies, evaluating their effectiveness on task retention and transfer efficiency across multiple benchmark datasets. Our experimental results demonstrate that predictive coding-based replay achieves superior retention performance (average 15.3% improvement) while maintaining competitive transfer efficiency, suggesting that biologically-inspired mechanisms can offer principled solutions to continual learning challenges. The proposed framework provides insights into the relationship between biological memory processes and artificial learning systems, opening new avenues for neuroscience-inspired AI research.

(2 min)

arXiv:2512.00791v1 Announce Type: new

Abstract: When the distributions of the training and test data do not coincide, the problem of understanding generalization becomes considerably more complex, prompting a variety of questions. In this work, we focus on a fundamental one: Is it always optimal for the training distribution to be identical to the test distribution? Surprisingly, assuming the existence of one-way functions, we find that the answer is no. That is, matching distributions is not always the best scenario, which contrasts with the behavior of most learning methods. Nonetheless, we also show that when certain regularities are imposed on the target functions, the standard conclusion is recovered in the case of the uniform distribution.

(2 min)

arXiv:2512.00856v1 Announce Type: new

Abstract: Probabilistic forecasting is essential for modern risk management, allowing decision-makers to quantify uncertainty in critical systems. This paper tackles this challenge using the volatile REFIT household dataset, which is complicated by a large structural data gap. We first address this by conducting a rigorous comparative experiment to select a Seasonal Imputation method, demonstrating its superiority over linear interpolation in preserving the data's underlying distribution. We then systematically evaluate a hierarchy of models, progressing from classical baselines (SARIMA, Prophet) to machine learning (XGBoost) and advanced deep learning architectures (LSTM). Our findings reveal that classical models fail to capture the data's non-linear, regime-switching behavior. While the LSTM provided the most well-calibrated probabilistic forecast, the Temporal Fusion Transformer (TFT) emerged as the superior all-round model, achieving the best point forecast accuracy (RMSE 481.94) and producing safer, more cautious prediction intervals that effectively capture extreme volatility.

(2 min)

arXiv:2512.01400v1 Announce Type: new

Abstract: Deep learning offers promising capabilities for the statistical downscaling of climate and weather forecasts, with generative approaches showing particular success in capturing fine-scale precipitation patterns. However, most existing models are region-specific, and their ability to generalize to unseen geographic areas remains largely unexplored. In this study, we evaluate the generalization performance of generative downscaling models across diverse regions. Using a global framework, we employ ERA5 reanalysis data as predictors and IMERG precipitation estimates at $0.1^\circ$ resolution as targets. A hierarchical location-based data split enables a systematic assessment of model performance across 15 regions around the world.

( 2

min )

arXiv:2512.01465v1 Announce Type: new

Abstract: Water quality monitoring is a core component of ecological environmental protection. However, sensor failures and other unavoidable factors often leave gaps in long-term monitoring data, which poses great challenges for water quality analysis. This paper proposes a Neural Tucker Convolutional Network (NTCN) model for water quality data imputation, which has two key components: a) it encodes the entities of each mode into embedding vectors and constructs a Tucker interaction tensor by outer product operations to capture the complex mode-wise feature interactions; b) it uses 3D convolution to extract fine-grained spatiotemporal features from the interaction tensor. Experiments on three real-world water quality datasets show that the proposed NTCN model outperforms several state-of-the-art imputation models in terms of accuracy.

( 2

min )

arXiv:2512.01562v1 Announce Type: new

Abstract: Change-point detection (CPD) in high-dimensional, large-volume time series is challenging for statistical consistency, scalability, and interpretability. We introduce TimePred, a self-supervised framework that reduces multivariate CPD to univariate mean-shift detection by predicting each sample's normalized time index. This enables efficient offline CPD using existing algorithms and supports the integration of XAI attribution methods for feature-level explanations. Our experiments show competitive CPD performance while reducing computational cost by up to two orders of magnitude. In an industrial manufacturing case study, we demonstrate improved detection accuracy and illustrate the practical value of interpretable change-point insights.

( 2

min )
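
A rough sketch of the reduction the abstract describes: regress each sample's normalized time index on its features, then look for a mean shift in the one-dimensional predictions. Everything concrete here is an assumption made for illustration: the least-squares regressor, the in-sample predictions, the single-change-point scan, the function names, and the synthetic data. It is not the TimePred implementation.

```python
import numpy as np

def predict_time_index(X):
    """Least-squares fit of the normalized time index in [0, 1] from features."""
    n = X.shape[0]
    t = np.linspace(0.0, 1.0, n)
    A = np.column_stack([X, np.ones(n)])  # features plus intercept
    coef, *_ = np.linalg.lstsq(A, t, rcond=None)
    return A @ coef

def single_mean_shift(y):
    """Return the split index k maximizing the between-segment mean-shift gain."""
    n = len(y)
    k = np.arange(1, n)                 # k = first index of the right segment
    csum = np.cumsum(y)
    left_mean = csum[:-1] / k
    right_mean = (csum[-1] - csum[:-1]) / (n - k)
    gain = k * (n - k) / n * (left_mean - right_mean) ** 2
    return int(k[np.argmax(gain)])

# Synthetic 5-dimensional series whose first two channels shift at sample 250.
rng = np.random.default_rng(0)
true_cp = 250
X = rng.normal(size=(400, 5))
X[true_cp:, :2] += 3.0

y_hat = predict_time_index(X)           # multivariate series -> univariate signal
estimated_cp = single_mean_shift(y_hat)
print("estimated change point:", estimated_cp)
```

The regressor can only separate early from late samples through features whose distribution changed, so its predictions jump where the data do; any univariate offline detector could replace the simple scan used here.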

arXiv:2512.00100v1 Announce Type: cross

Abstract: As COVID-19 transitions into an endemic disease that remains constantly present in the population at a stable level, monitoring its prevalence without invasive measures becomes increasingly important. In this paper, we present a deep neural network estimator for the COVID-19 daily case count based on wastewater surveillance data and other confounding factors. This work builds upon the study by Jiang, Kolozsvary, and Li (2024), which connects the COVID-19 case counts with testing data collected early in the pandemic. Using the COVID-19 testing data and the wastewater surveillance data during the period when both data were highly reliable, one can train an artificial neural network that learns the nonlinear relation between the COVID-19 daily case count and the wastewater viral RNA concentration. From a machine learning perspective, the main challenge lies in addressing temporal feature reliability, as the training data has different reliability over different time periods.

( 3

min )

arXiv:2512.00103v1 Announce Type: cross

Abstract: This work examines how three different image-based methods, VGG16, ViT-B/16, and CoAtNet-Tiny, perform in identifying depression, schizophrenia, and healthy controls using daily actigraphy records. Wrist-worn activity signals from the Psykose and Depresjon datasets were converted into 30 by 48 images and evaluated through a three-fold subject-wise split. Although all methods fitted the training data well, their behaviour on unseen data differed. VGG16 improved steadily but often settled at lower accuracy. ViT-B/16 reached strong results in some runs, but its performance shifted noticeably from fold to fold. CoAtNet-Tiny stood out as the most reliable, recording the highest average accuracy and the most stable curves across folds. It also produced the strongest precision, recall, and F1-scores, particularly for the underrepresented depression and schizophrenia classes. Overall, the findings indicate that CoAtNet-Tiny performed most consistently on the actigraphy images, while VGG16 and ViT-B/16 yielded mixed results. These observations suggest that certain hybrid designs may be especially suited for mental-health work that relies on actigraphy-derived images.

( 2

min )

arXiv:2512.00350v1 Announce Type: cross

Abstract: We introduce MedCondDiff, a diffusion-based framework for multi-organ medical image segmentation that is efficient and anatomically grounded. The model conditions the denoising process on semantic priors extracted by a Pyramid Vision Transformer (PVT) backbone, yielding a semantically guided and lightweight diffusion architecture. This design improves robustness while reducing both inference time and VRAM usage compared to conventional diffusion models. Experiments on multi-organ, multi-modality datasets demonstrate that MedCondDiff delivers competitive performance across anatomical regions and imaging modalities, underscoring the potential of semantically guided diffusion models as an effective class of architectures for medical imaging tasks.

( 2

min )

arXiv:2504.10097v3 Announce Type: replace

Abstract: Understanding user intent is essential for situational and context-aware decision-making. Motivated by a real-world scenario, this work addresses intent predictions of smart device users in the vicinity of vehicles by modeling sequential spatiotemporal data. However, in real-world scenarios, environmental factors and sensor limitations can result in non-stationary and irregularly sampled data, posing significant challenges. To address these issues, we propose STaRFormer, a Transformer-based approach that can serve as a universal framework for sequential modeling. STaRFormer utilizes a new dynamic attention-based regional masking scheme combined with a novel semi-supervised contrastive learning paradigm to enhance task-specific latent representations. Comprehensive experiments on 56 datasets varying in types (including non-stationary and irregularly sampled), tasks, domains, sequence lengths, training samples, and applications demonstrate the efficacy of STaRFormer, achieving notable improvements over state-of-the-art approaches.

( 2

min )

arXiv:2508.01407v3 Announce Type: replace

Abstract: Accurate, explainable and physically credible forecasting remains a persistent challenge for multivariate time-series whose statistical properties vary across domains. We propose DORIC, a Domain-Universal, ODE-Regularized, Interpretable-Concept Transformer for Time-Series Forecasting that generates predictions through five self-supervised, domain-agnostic concepts while enforcing differentiable residuals grounded in first-principles constraints.

( 2

min )

AquaCulture Shock program, in collaboration with MIT-Scandinavia MISTI, offers international internships for AI and autonomy in aquaculture

( 7

min )

For PhD student Benjamin Manning, the future of work means grasping AI’s role on our behalf while transforming and accelerating social scientific discovery.

( 5

min )

As the Women in Machine Learning Workshop (WiML) marks its 20th annual gathering, cofounders, friends, and collaborators Jenn Wortman Vaughan and Hanna Wallach reflect on WiML’s evolution, navigating the field of ML, and their work in responsible AI.

The post Ideas: Community building, machine learning, and the future of AI appeared first on Microsoft Research.

( 43

min )

Researchers worldwide rely on open-source technologies as the foundation of their work. To equip the community with the latest advancements in digital and physical AI, NVIDIA is further expanding its collection of open AI models, datasets and tools — with potential applications in virtually every research field. At NeurIPS, one of the world’s top AI

( 9

min )

arXiv:2511.21970v1 Announce Type: new

Abstract: This paper presents a systematic study on developing multi-template machine learning (ML) surrogate models and applying them to the inverse design of transformers (XFMRs) in radio-frequency integrated circuits (RFICs). Our study starts with benchmarking four widely used ML architectures, including MLP-, CNN-, UNet-, and GT-based models, using the same datasets across different XFMR topologies. To improve modeling accuracy beyond these baselines, we then propose a new frequency-domain self-transfer learning technique that exploits correlations between adjacent frequency bands, leading to around 30%-50% accuracy improvement in the S-parameters prediction. Building on these models, we further develop an inverse design framework based on the covariance matrix adaptation evolutionary strategy (CMA-ES) algorithm. This framework is validated using multiple impedance-matching tasks, all demonstrating fast convergence and trustworthy performance. These results advance the goal of AI-assisted specs-to-GDS automation for RFICs and provide RFIC designers with actionable tools for integrating AI into their workflows.

( 2

min )

arXiv:2511.22101v1 Announce Type: new

Abstract: The report goes through the main steps of replicating and improving the article "Adaptive Liquidity Provision in Uniswap V3 with Deep Reinforcement Learning." The replication part includes how to obtain data from the Uniswap Subgraph, details of the implementation, and comments on the results. After the replication, I propose a new structure based on the original model, which combines Mamba with DDQN and a new reward function. In this new structure, I clean the data again and introduce two new baselines for comparison. As a result, although the model has not yet been applied to all datasets, it shows stronger theoretical support than the original model and performs better in some tests.

( 2

min )

arXiv:2511.22674v1 Announce Type: new

Abstract: Inspired by recent advances in large language models, foundation models have been developed for zero-shot time series forecasting, enabling prediction on datasets unseen during pretraining. These large-scale models, trained on vast collections of time series, learn generalizable representations for both point and probabilistic forecasting, reducing the need for task-specific architectures and manual tuning.

In this work, we review the main architectures, pretraining strategies, and optimization methods used in such models, and study the effect of fine-tuning after pretraining to enhance their performance on specific datasets. Our empirical results show that fine-tuning generally improves zero-shot forecasting capabilities, especially for long-term horizons.

( 2

min )

arXiv:2511.22866v1 Announce Type: new

Abstract: Numerous graph neural network (GNN)-based algorithms have been proposed to solve graph-based combinatorial optimization problems (COPs), but methods to explain their predictions remain largely undeveloped. We introduce ARM-Explainer, a post-hoc, model-level explainer based on association rule mining, and demonstrate it on the predictions of the hybrid geometric scattering (HGS) GNN for the maximum clique problem (MCP), a canonical NP-hard graph-based COP. The eight most explanatory association rules discovered by ARM-Explainer achieve high median lift and confidence values of 2.42 and 0.49, respectively, on test instances from the TWITTER and BHOSLIB-DIMACS benchmark datasets. ARM-Explainer identifies the most important node features, together with their value ranges, that influence the GNN's predictions on these datasets. Furthermore, augmenting the GNN with informative node features substantially improves its performance on the MCP, increasing the median largest-found clique size by 22% (from 29.5 to 36) on large graphs from the BHOSLIB-DIMACS dataset.

( 2

min )
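
For readers unfamiliar with the two rule metrics quoted in the abstract, here is a small sketch of the standard definitions: confidence of a rule A -> B is supp(A and B) / supp(A), and lift is confidence / supp(B). The toy records and the feature names (`high_degree`, `low_degree`, `in_clique`) are hypothetical and are not taken from the paper or its datasets.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(items <= t for t in transactions) / len(transactions)

def rule_metrics(transactions, antecedent, consequent):
    """Confidence and lift of the rule antecedent -> consequent."""
    supp_a = support(transactions, antecedent)
    supp_b = support(transactions, consequent)
    supp_ab = support(transactions, set(antecedent) | set(consequent))
    confidence = supp_ab / supp_a
    lift = confidence / supp_b
    return confidence, lift

# Hypothetical per-node records: discretized features plus the predicted label.
transactions = [
    {"high_degree", "in_clique"},
    {"high_degree", "in_clique"},
    {"high_degree"},
    {"in_clique"},
    {"low_degree"},
    {"low_degree"},
    {"high_degree", "in_clique"},
    {"low_degree"},
]

confidence, lift = rule_metrics(transactions, {"high_degree"}, {"in_clique"})
print(f"confidence={confidence:.2f}, lift={lift:.2f}")  # confidence=0.75, lift=1.50
```

A lift above 1 means the antecedent makes the consequent more likely than its base rate, which is how the reported median lift of 2.42 should be read.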

arXiv:2511.22882v1 Announce Type: new

Abstract: We construct pushforward distributions via the universal covering map $\rho: S^3 \to L(p;q)$ with the goal of approximating these distributions using flows on $L(p;q)$. We highlight that our method deletes redundancies in the case of a symmetric $S^3$ distribution. Using our model, we approximate the pushforwards of von Mises-Fisher-induced target densities as well as that of a $Z_{12}$-symmetric Boltzmann distribution on $S^3$ constructed to model benzene.

( 2

min )

arXiv:2511.23083v1 Announce Type: new

Abstract: High-capacity kernel Hopfield networks exhibit a "Ridge of Optimization" characterized by extreme stability. While previously linked to "Spectral Concentration," its origin remains elusive. Here, we analyze the network dynamics on a statistical manifold, revealing that the Ridge corresponds to the "Edge of Stability," a critical boundary where the Fisher Information Matrix becomes singular. We demonstrate that the apparent Euclidean force antagonism is a manifestation of \textit{Dual Equilibrium} in the Riemannian space. This unifies learning dynamics and capacity via the Minimum Description Length principle, offering a geometric theory of self-organized criticality.

( 2

min )

arXiv:2511.23120v1 Announce Type: new

Abstract: Pretrained transformers provide rich, general-purpose embeddings, which are transferred to downstream tasks. However, the current transfer strategies, fine-tuning and probing, either distort the pretrained geometric structure of the embeddings or lack sufficient expressivity to capture task-relevant signals. These issues become even more pronounced when supervised data are scarce. Here, we introduce Freeze, Diffuse, Decode (FDD), a novel diffusion-based framework that adapts pretrained embeddings to downstream tasks while preserving their underlying geometric structure. FDD propagates supervised signal along the intrinsic manifold of frozen embeddings, enabling a geometry-aware adaptation of the embedding space. Applied to antimicrobial peptide design, FDD yields low-dimensional, predictive, and interpretable representations that support property prediction, retrieval, and latent-space interpolation.

( 2

min )

arXiv:2511.23307v1 Announce Type: new

Abstract: This paper presents a framework for physics-informed learning in complex cyber-physical systems governed by differential equations with both unknown dynamics and algebraic invariants. First, we formalize the Hybrid Recurrent Physics-Informed Neural Network (HRPINN), a general-purpose architecture that embeds known physics as a hard structural constraint within a recurrent integrator to learn only residual dynamics. Second, we introduce the Projected HRPINN (PHRPINN), a novel extension that integrates a predict-project mechanism to strictly enforce algebraic invariants by design. The framework is supported by a theoretical analysis of its representational capacity. We validate HRPINN on a real-world battery prognostics DAE and evaluate PHRPINN on a suite of standard constrained benchmarks. The results demonstrate the framework's potential for achieving high accuracy and data efficiency, while also highlighting critical trade-offs between physical consistency, computational cost, and numerical stability, providing practical guidance for its deployment.

( 2

min )

arXiv:2211.10360v2 Announce Type: replace

Abstract: High-fidelity design evaluation processes such as Computational Fluid Dynamics (CFD) and Finite Element Analysis (FEA) are often replaced with data-driven surrogates to reduce computational cost in engineering design optimization. However, building accurate surrogate models still requires a large number of expensive simulations. To address this challenge, we introduce epsilon HQS, a scalable active learning (AL) strategy that leverages a student-teacher framework to train deep neural networks (DNNs) efficiently. Unlike Bayesian AL methods, which are computationally demanding with DNNs, epsilon HQS selectively queries informative samples to reduce labeling cost. Applied to CFD, FEA, and propeller design tasks, our method achieves higher accuracy under fixed labeling cost budgets.

( 2

min )

arXiv:2503.07995v2 Announce Type: replace

Abstract: In this paper, we propose a method for density-based clustering in high-dimensional spaces that combines Locality-Sensitive Hashing (LSH) with the Quick Shift algorithm. The Quick Shift algorithm, known for its hierarchical clustering capabilities, is extended by integrating approximate Kernel Density Estimation (KDE) using LSH to provide efficient density estimates. The proposed approach achieves almost linear time complexity while preserving the consistency of density-based clustering.

( 2

min )
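To make the Quick Shift step concrete, here is a minimal sketch of the basic algorithm: estimate a density at every point, then link each point to its nearest neighbor of strictly higher density. It uses an exact O(n^2) Gaussian KDE rather than the LSH-based approximation the abstract proposes, so it illustrates the clustering rule only, not the near-linear running time. The function names and the `bandwidth` and `max_dist` parameters are assumptions made for illustration.

```python
import numpy as np

def quick_shift(points, bandwidth, max_dist):
    """Return (parent, density); parent[i] == i marks a cluster root."""
    n = len(points)
    diff = points[:, None, :] - points[None, :, :]
    d2 = (diff ** 2).sum(axis=-1)  # pairwise squared distances
    # Exact (unnormalized) Gaussian kernel density estimate.
    density = np.exp(-d2 / (2.0 * bandwidth ** 2)).sum(axis=1)
    parent = np.arange(n)
    for i in range(n):
        higher = np.where(density > density[i])[0]
        if higher.size == 0:
            continue  # global density maximum stays a root
        j = higher[np.argmin(d2[i, higher])]
        # Only link when the higher-density neighbor is close enough.
        if d2[i, j] <= max_dist ** 2:
            parent[i] = j
    return parent, density

def labels_from_parents(parent):
    # Follow parent links up to the root; the root index is the label.
    labels = np.empty(len(parent), dtype=int)
    for i in range(len(parent)):
        r = i
        while parent[r] != r:
            r = parent[r]
        labels[i] = r
    return labels

pts = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.05, 0.05],
                [5.0, 5.0], [5.1, 5.0], [5.0, 5.1], [5.05, 5.05]])
parent, _ = quick_shift(pts, bandwidth=0.5, max_dist=1.0)
print(labels_from_parents(parent))
```

Because every link points to a strictly denser point, the parent structure is a forest and the root lookup always terminates. Varying `max_dist` cuts that forest at different scales, which is where the hierarchical behavior comes from.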

arXiv:2311.09922v4 Announce Type: replace-cross

Abstract: We demonstrate a multiplication method based on numbers represented as a set of polynomial radix-2 indices stored as an integer list. The 'polynomial integer index multiplication' method is a set of algorithms implemented in Python. We demonstrate the method to be faster than both the Number Theoretic Transform (NTT) and Karatsuba for multiplication within a certain bit range; both were also implemented in Python for comparison with the polynomial radix-2 integer method. We demonstrate that it is possible to express any integer or real number as a list of integer indices, representing a finite series in base two. The finite-series integer index representation of a number can then be stored and distributed across multiple CPUs / GPUs. We show that addition and multiplication can be applied as two's complement additions operating on the index integer representations and can be fully distributed across a given CPU / GPU architecture. We demonstrate fully distributed arithmetic operations such that the 'polynomial integer index multiplication' method overcomes the current limitation of parallel multiplication methods, i.e., the need to share common core memory and common disk for the calculation of results and intermediate results.

( 3

min )
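The index-list representation described in the abstract can be sketched as follows. This is an assumed, schoolbook-style illustration and not the authors' algorithm: an integer is stored as the list of exponents of its set bits, and a product is formed by adding exponents pairwise and then resolving carries. The helper names are hypothetical, and the sketch makes no claim about speed relative to NTT or Karatsuba.

```python
from collections import Counter

def to_indices(n: int) -> list[int]:
    """Exponents k such that 2**k appears in the binary expansion of n >= 0."""
    return [k for k in range(n.bit_length()) if (n >> k) & 1]

def from_indices(indices) -> int:
    """Rebuild the integer from a list of distinct exponents."""
    return sum(1 << k for k in indices)

def multiply_indices(a, b):
    """Multiply two index lists; returns a sorted index list of the product."""
    # 2**i * 2**j = 2**(i + j), so each pair of indices contributes one term.
    counts = Counter(i + j for i in a for j in b)
    result = []
    while counts:
        k = min(counts)
        c = counts.pop(k)
        if c & 1:
            result.append(k)          # an odd count leaves one 2**k term
        if c >> 1:
            counts[k + 1] += c >> 1   # pairs of 2**k carry into 2**(k + 1)
    return result

a, b = to_indices(13), to_indices(11)
print(a, b, multiply_indices(a, b))
print(from_indices(multiply_indices(a, b)))
```

Each pairwise sum `i + j` is independent of the others, which is the property that makes this style of representation straightforward to spread across workers; only the carry resolution needs the partial results combined.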

arXiv:2405.00642v4 Announce Type: replace-cross

Abstract: Analyzing neural network dynamics via stochastic gradient descent (SGD) is crucial to building theoretical foundations for deep learning. Previous work has analyzed structured inputs within the \textit{hidden manifold model}, often under the simplifying assumption of a Gaussian distribution. We extend this framework by modeling inputs as Gaussian mixtures to better represent complex, real-world data. Through empirical and theoretical investigation, we demonstrate that with proper standardization, the learning dynamics converges to the behavior seen in the simple Gaussian case. This finding exhibits a form of universality, where diverse structured distributions yield results consistent with Gaussian assumptions, thereby strengthening the theoretical understanding of deep learning models.

( 2

min )

arXiv:2510.09042v2 Announce Type: replace-cross

Abstract: In this work, we propose a meta-learning-based Koopman modeling and predictive control approach for nonlinear systems with parametric uncertainties. An adaptive deep meta-learning-based modeling approach, called Meta Adaptive Koopman Operator (MAKO), is proposed. Without knowledge of the parametric uncertainty, the proposed MAKO approach can learn a meta-model from a multi-modal dataset and efficiently adapt to new systems with previously unseen parameter settings by using online data. Based on the learned meta Koopman model, a predictive control scheme is developed, and the stability of the closed-loop system is ensured even in the presence of previously unseen parameter settings. Through extensive simulations, our proposed approach demonstrates superior performance in both modeling accuracy and control efficacy as compared to competitive baselines.

( 2

min )

arXiv:2511.23083v1 Announce Type: cross

Abstract: High-capacity kernel Hopfield networks exhibit a "Ridge of Optimization" characterized by extreme stability. While previously linked to "Spectral Concentration," its origin remains elusive. Here, we analyze the network dynamics on a statistical manifold, revealing that the Ridge corresponds to the "Edge of Stability," a critical boundary where the Fisher Information Matrix becomes singular. We demonstrate that the apparent Euclidean force antagonism is a manifestation of \textit{Dual Equilibrium} in the Riemannian space. This unifies learning dynamics and capacity via the Minimum Description Length principle, offering a geometric theory of self-organized criticality.

( 2

min )

arXiv:2405.00642v4 Announce Type: replace

Abstract: Analyzing neural network dynamics via stochastic gradient descent (SGD) is crucial to building theoretical foundations for deep learning. Previous work has analyzed structured inputs within the \textit{hidden manifold model}, often under the simplifying assumption of a Gaussian distribution. We extend this framework by modeling inputs as Gaussian mixtures to better represent complex, real-world data. Through empirical and theoretical investigation, we demonstrate that with proper standardization, the learning dynamics converges to the behavior seen in the simple Gaussian case. This finding exhibits a form of universality, where diverse structured distributions yield results consistent with Gaussian assumptions, thereby strengthening the theoretical understanding of deep learning models.

( 2

min )

arXiv:2503.07995v2 Announce Type: replace-cross

Abstract: In this paper, we propose a method for density-based clustering in high-dimensional spaces that combines Locality-Sensitive Hashing (LSH) with the Quick Shift algorithm. The Quick Shift algorithm, known for its hierarchical clustering capabilities, is extended by integrating approximate Kernel Density Estimation (KDE) using LSH to provide efficient density estimates. The proposed approach achieves almost linear time complexity while preserving the consistency of density-based clustering.

( 2

min )

Black Friday is leveling up. Get ready to score one of the biggest deals of the season — 50% off the first three months of a new GeForce NOW Ultimate membership. That’s GeForce RTX 5080-class power in the cloud for half the price. The NVIDIA Blackwell RTX upgrade is now fully live across all servers

Read Article

( 6

min )

arXiv:2511.21118v1 Announce Type: new